History Of Semiconductors

In a world increasingly driven by technology, it’s hard to imagine life without the tiny microchip that fuels our devices and sparks groundbreaking innovations.

Microchips have transformed numerous industries, serving as the backbone of modern electronics and computing.

Understanding their monumental impact begins by unraveling the history behind these seemingly minuscule wonders.

Microchip technology emerged from a landscape dominated by bulky electronic components, whose limitations stifled efficiency and innovation.

The transition to integrated circuits didn’t happen overnight; it was facilitated by key innovators who saw the potential to revolutionize how we build devices and process information.

Jack Kilby and Robert Noyce, two pivotal figures in this era, pushed the boundaries of what was conceivable, igniting a technological transformation.

As we dive deeper into the microchip revolution, we will explore its historical context, the visionaries who shaped its path, and the profound effects it has had on electronics and countless fields of life.

Join us on this journey to uncover how microchips not only redefined technology but also laid the foundation for our high-tech future.


Historical Context of Microchip Technology

The microchip revolution, a movement that fundamentally altered the digital landscape, is anchored firmly in the innovations introduced by Jack Kilby in 1958 and Robert Noyce in 1959.

These key engineers independently unveiled the first integrated circuits, setting the stage for an unprecedented transformation in electronics.

With the vision to see beyond the constraints of contemporary technology, they built on the history of semiconductors to redefine the entire circuit paradigm, opening doors to efficiencies and compactness never before imagined.

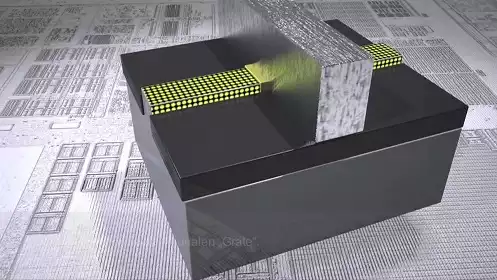

Integrated circuits emerged from necessity—the proverbial mother of invention—as engineers grappled with the ‘tyranny of numbers’: a dilemma highlighting the complexities of connecting a burgeoning number of transistors.

This led to the birth of an electronic component that was not simply a triumph of miniaturization but a radical reimagining.

A tiny sliver of semiconductor laid the groundwork for what we now recognize as modern microchips, which serve as the cornerstone of electronic circuits and, by extension, modern life.

From the planar process conceived by Jean Hoerni to the engineering of Texas Instruments, which built the first handheld calculator prototype in 1967, every innovation contributed significantly to the semiconductor industry.

E. Marshall Wilder, whose part in shaping Silicon Valley folklore reads like that of a literary protagonist, was among those whose personal histories merged with the grand narrative of an entire generation of firms.

This coalescence of individual engineers, process engineers, and visionary leadership steered the semiconductor industry head-on into the digital revolution, culminating in the transformative commercialization of integrated circuits.

Evolution from Electronic Components

This seismic shift had its roots in the evolution from basic electronic components.

The resistors, capacitors, and inductors that regulated electronic signals in earlier devices were the essential building blocks of the predecessors of modern circuitry.

Their functions ranged from controlling current flow to conditioning signal levels, which were fundamental to the nascent stages of signal processing and power management.

However, it was the invention of the transistor by John Bardeen, Walter Brattain, and William Shockley at Bell Labs in 1947 that sparked a revolution, fostering an era of electronic signals capable of switching at unprecedented speeds and with a fraction of the heat generation associated with vacuum tubes.

This innovation set the ball rolling for continual advances in semiconductor technology, which converged on the path of miniaturization and integration.

It allowed complex electronic circuits to be built and expanded the functionality of circuit boards previously limited by discrete components.

Challenges in the Pre-Microchip Era

Prior to the entrance of the microchip, the landscape of electronics was fraught with challenges.

Engineers faced a Sisyphean task as the prevalent use of discrete electronic components contributed to sizeable circuit boards unable to transcend certain spatial limitations.

Valves, which once pulsated with the lifeblood of electronic circuits, became relics of inefficiency in the modern era, burdened by weight and a voracious appetite for power.

The increasing demand for more horsepower in computational capabilities only exacerbated the issues, introducing the electronic bottleneck widely recognized as The Tyranny of Numbers.

Engineers at the time contemplated a future that seemed confined by physical wiring: how could the promise of smaller, more powerful computers be reconciled with the technology then on offer?

As it turns out, challenges are often the catalysts for breakthroughs.

In this charged atmosphere, the integrated circuit materialized as a beacon of hope.

It was not only an answer to the practical conundrum faced by engineers but also a testament to humanity’s relentless pursuit of improvement.

The ensuing shift did more than just set the course for careers in microelectronics; it signaled the dawn of an age where the entire circuit could fit on the tip of a finger, changing the very beat of modernity.

In this history, more than just observing the development of powerful computers and the minuscule size of circuitry, we pay homage to the collective and individual engineers who, like Bauer E. Marshall Wilder and Robert Noyce, shaped what would eventually become Integrated Device Technology.

These oral histories, etched into the fabric of technological evolution, underscore the microchip’s status as not just a physical object but as a monument to human ingenuity and enterprise—a testament to the transformative power of attention to people and process in engineering.

Key Innovators in Microchip Development

The transformation from bulky electronic circuits laden with individual components to the sleek, ever-miniaturizing world of microchips can be attributed to a cadre of brilliant innovators whose groundbreaking work propelled us into the digital age.

At the heart of this metamorphosis was a pivotal transition from the cumbersome vacuum tube era to an era dominated by the transistor, which was conceived at Bell Labs in 1947.

This revolutionary component set the stage for the relentless march towards miniaturization that would spawn the development of microchips.

It is an incontrovertible truth that without the transistor, the modern microchip as we know it would simply not exist.

The stage having been set by the advent of transistors, Jack Kilby at Texas Instruments took a bold step forward in 1958 by creating the first integrated circuit, establishing a principle that would become a cornerstone of modern microchip design.

Close on his heels, Robert Noyce independently formulated a more practical version of the integrated circuit in 1959.

His approach facilitated mass production, thus accelerating the ascent of microchip technology.

The leap from a myriad of discrete components to the seamless realm of integrated circuits signifies a profound milestone in the annals of electronic evolution — it heralded compact and efficient microchips that are now indispensable to today’s technology.

The innovations of these key engineers did not merely advance the field of electronics; they reshaped our society, forging new career paths in microelectronics and spawning computers that were far more powerful, and far smaller, than any previously imagined.

Jack Kilby’s Contributions

Jack Kilby, during his tenure at Texas Instruments, etched his name into the annals of history by inventing the first integrated circuit in 1958.

This tour de force in circuit design catapulted circuit boards into a new era and was promptly seen as an asset even to the U.S. Air Force due to its potential military applications.

Kilby’s pioneering integrated circuit, although hampered by the need for gold wires to make component connections, proved this concept’s utility and ushered in an evolution of microchip design.

Despite the complexities of his initial design, Kilby’s foresight laid a robust foundation for future miniaturized electronics.

His contributions to simplified, more accessible circuits soon evolved into the microchips prevalent now in virtually every modern device.

Fittingly, Kilby was honored with the Nobel Prize in Physics in 2000, a just tribute to a man whose seminal work stands as a quintessential pillar of the Information Age.

Robert Noyce’s Innovations

Where Kilby enabled the initial conceptual leap, Robert Noyce revolutionized the practical application and production of microchips.

Noyce’s invention of the monolithic integrated circuit in 1959, alongside a team of talented engineers, marked a transformation within the semiconductor industry, by merging all components onto a single die — an advancement that was nothing short of a feat.

Noyce’s monolithic integrated circuit streamlined the manufacturing process like never before, introducing the capability to fabricate entire devices in a single production sweep.

This meant cheaper production costs and galvanized the possibility of mass-producing these integral building blocks of modern electronics.

Noyce’s groundbreaking work built on Jean Hoerni’s planar process, which enhanced the manufacturability of integrated circuits.

Moreover, the inclusion of on-chip aluminum interconnections within Noyce’s designs was a masterstroke, bolstering the reliability and efficiency of these monolithic integrated circuits.

Robert Noyce, through his relentless innovation, didn’t just contribute to the electronics sphere — his work underpinned the very foundation of Silicon Valley and the seismic growth of the digital revolution.

The Shift from Discrete Components to Integrated Circuits

The electronics landscape before the microchip was like a puzzle with countless pieces – resistors, capacitors, and valves cluttered circuit boards, each playing a crucial role but also contributing to a complex tangle of limitations.

A monumental shift was on the horizon, one that was needed to overcome the challenges of size, power consumption, and maintenance that were inherent in the discrete components of the day.

The burgeoning demands of the technology world called for innovation that could shrink the expansive sprawl of these electrical components into a more manageable, efficient package.

This call was answered by the advent of integrated circuits.

Integrated circuits, or microchips as we commonly know them, emerged as nothing short of a revolution.

They represented a quantum leap from their bulky, energy-guzzling predecessors, enabling the creation of smaller, more powerful, and much more reliable electronic devices.

By condensing the complex labyrinth of interconnections and components into a single tiny chip, integrated circuits didn’t just tweak the existing design; they completely transformed it.

This paradigm shift has since been tightly woven into the fabric of all advanced electronic devices, permanently altering the technological landscape.

Limitations of Early Electronic Components

The pre-microchip era saw electronic enthusiasts engaging with discrete components for do-it-yourself projects; however, these components, while accessible, were also the shackles that bound the potential of electronics.

The bulky nature of resistors, capacitors, and especially valves, which were critical for amplifying electronic signals, meant that creating compact, portable devices was more a dream than reality.

Valves were particularly cumbersome with their heavy weight and voracious appetite for power, causing numerous headaches in maintenance and integration.

The demand for compactness was at odds with the reality of the components available, imposing significant constraints that stifled technological advancement.

The inefficacy of these discrete components was clear—they were a stopgap, a temporary solution that could not keep pace with the fast-evolving demands of electronic innovation.

It was becoming increasingly evident that the next leap in electronics would require a radical reimagining of component design, one that prioritized miniaturization and efficiency at its core.

The Role of Transistors in Microchip Development

The invention of the semiconductor transistor marked the beginning of an era, setting in motion the trend toward the miniaturization of electronic components.

With the invention of the transistor, devices could become more energy-efficient, and circuit designs grew in complexity and reliability, charting a course away from the ungainly discrete components of earlier designs.

However, as the transistor began to take its central role in electronic design, a new challenge emerged: the manual assembly and wiring of these components was a bottleneck, precluding further scaling of complexity.

Engineers were all too familiar with what came to be known as the “Tyranny of Numbers”: the daunting task of managing the rapid growth in hand-wired connections as more transistors were added to circuits.
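One way to see why the growing number of hand-wired connections became so punishing is a rough reliability estimate. The sketch below is purely illustrative: it assumes every soldered joint fails independently with the same small probability, and the failure rate of 1 in 10,000 is a made-up figure chosen for the example, not a historical measurement.

```python
def assembly_yield(joints: int, joint_failure_prob: float) -> float:
    """Chance that all `joints` connections are good, assuming independent failures."""
    return (1.0 - joint_failure_prob) ** joints


# Hypothetical failure rate per hand-made joint (an assumption, not historical data).
JOINT_FAILURE_PROB = 1e-4

for joints in (100, 1_000, 10_000, 100_000):
    chance = assembly_yield(joints, JOINT_FAILURE_PROB)
    print(f"{joints:>7} joints: {chance:.3f} chance of a fault-free assembly")
```

Under these assumptions the chance of a fault-free assembly falls off sharply as the joint count climbs, which is the practical face of the problem: each added component brought more connections, and each connection was another place for the whole circuit to fail.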

The quest for a solution was palpable, and it was met with the Monolithic Idea, independently conceptualized by Jack Kilby and Robert Noyce.

Their vision of integrating an entire circuit’s components onto a single piece of semiconductor material fundamentally resolved the wiring tyranny, setting the stage for the complex, sophisticated electronics we take for granted today.

Their pioneering work laid the foundation for an industry that would continue to grow, driven by the relentless pursuit of better manufacturing processes and a commitment to the efficiency, scalability, and functionality that are essential to modern microchip technology.

Impact of Microchips on Electronics and Computing

The creation of the transistor at Bell Labs in 1947 propelled the electronic world into a new era, signaling an end to the tyranny of cumbersome vacuum tube technology and kickstarting the relentless drive towards miniaturization.

This seismic shift laid the critical groundwork for the invention that would transform technology: the microchip.

When Jack Kilby at Texas Instruments crafted the first integrated circuit in 1958, he wasn’t just tinkering with a sliver of germanium; he was crafting the future as we know it.

His innovation consolidated disparate electronic components into a unified whole, marking a foundational moment in the ascent of modern microchip technology.

Months later, working independently of Kilby, Robert Noyce conceived a parallel invention: a monolithic integrated circuit in 1959.

His design was poised for mass production and became integral to catapulting the microchip into mainstream production.

The ingenuity of these two pioneers laid a path that the nascent semiconductor industry would traverse to revolutionize electronics and computing.

Later, the advent of high-speed CMOS technology, despite initial resistance from titans like Intel and AMD, was crucial in pushing the performance of semiconductors beyond previous boundaries.

The transformation from individual, discrete components to elegant, compact integrated circuits heralded a technological renaissance.

It set into motion a new digital age marked by exponential advancements in processing power, shrinking computers from room-sized behemoths to palm-sized marvels and driving the gears of the digital revolution.

Early Adoption by Pioneering Organizations

One of the earliest and most significant adopters of microchip technology was NASA, which, between 1961 and 1965, leaned heavily on this nascent technology to drive its missions to space.

This partnership didn’t just propel humanity towards the stars; it cemented microchips’ status as reliable and essential for cutting-edge applications.

Following Kilby’s groundbreaking work, multiple industries quickly recognized the potential of integrated circuits.

From aerospace to consumer electronics, the implications of this compact powerhouse were limitless.

As the wave of innovation spread, Noyce’s scalable monolithic integrated circuit became the cornerstone of widespread adoption, making the vast potential of microchip technology a tangible reality.

Then came the Intel 4004 in 1971 — the world’s first commercially available microprocessor.

It wasn’t merely a triumph in engineering; it was a demonstration of microchips’ multifaceted utility across countless sectors.

The impact was rapid and far-reaching, solidifying microchips as the linchpin of modern technology.

By 1984, the Adidas Micropacer further illustrated microchips’ versatility, embedding sophisticated tracking technology into sports footwear, proving these circuits were not confined to traditional computing devices alone.

Overcoming Challenges in Reliability and Manufacturing

Before the microchip, the electronic landscape was littered with hand-built valves, intricate in their design, yet flawed in their miniaturization.

Shrinking these valves down shifted their electrical properties, presenting a roadblock to advancement.

The world lacked machinery capable of precision on such a diminutive scale, underlining the urgent need for a revolution in circuit manufacturing.

Moreover, maintaining a vacuum in these miniature valves to ensure their operation was a task fraught with technical challenges.

It was clear that the reliability and efficiency of early electronic designs needed a radical overhaul to meet the demands of sectors such as aerospace and the military, where precision was non-negotiable.

The epochal entrée of the integrated circuit proved to be a panacea.

What once required laborious, manual wiring transformed into the production of monolithic units—a quantum leap in manufacturing efficiency and circuit reliability.

This tectonic shift in how electrical circuits were made mirrored the ambitious spirit of an industry always leaning into the future—a future meticulously crafted by integrated circuits nestled within the heart of our most powerful computers and the most mundane everyday devices.

In presenting the undeniable impact of the microchip, one not only appreciates the history of a revolutionary component but the vision of the minds behind it.

After all, it’s these pivotal moments in the history of semiconductors, the dedication of key engineers like Jack Kilby and Robert Noyce, and the pioneering drive of early adopters that frame the very panorama of our modern life, touching upon careers in microelectronics and beyond.

The microchip revolution is not just a chapter in the annals of technology; it is the undercurrent of an ever-evolving digital society.

The Legacy of the Microchip Revolution

The Microchip Revolution stands as one of humanity’s great leaps in technology, sowing the seeds for a future rich with digital possibility.

It’s a tale of vision, toil, and ingenuity, starting with Jack Kilby’s development of the integrated circuit in 1958—a creation that presaged the intertwined destinies of electronic circuits and human progress.

His triumph, followed swiftly by Robert Noyce’s invention of the monolithic integrated circuit in 1959, revolutionized the process of mass production for microchips and made them a mainstay across myriad technologies.

As monumental as these achievements were, they were made possible by the earlier transition from vacuum tube technology to the transistor in 1947.

This was the essential step in technology that allowed the miniaturization of electronic devices to begin, setting the stage for the dazzling innovations in microchip technology.

The historical narrative of the microchip is one of cascading breakthroughs, each refining the tapestry of modern electronics and casting a wide influence over everyday life and the ever-evolving digital landscape.

The enduring journey of the microchip encapsulates a narrative of rigor and relentless innovation, underscoring the enduring effects of a pivotal chapter in our technological narrative.

Influence on Modern Technology

In tracing the lineage of contemporary computing, it is essential to acknowledge the crucial role of microprocessors, starting with the groundbreaking 4004 and marching forward to the powerful 8086.

The 8086 lies at the root of the x86 architecture, a cornerstone still dominating personal computers across the globe.

Microprocessors have unlocked untold possibilities, giving rise to smaller, more powerful computing devices and spurring a personal computing revolution that permeates every aspect of daily life.

Today, modern microchips, teeming with billions of transistors, demonstrate the leaps and bounds made in semiconductor fabrication from their early ancestors.

Thanks to the march of miniaturization, prophesied by Moore’s Law, we have witnessed the evolution of devices becoming progressively compact, powerful, and energy-efficient—a trend that continues to redefine modern technology.
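The pace implied by Moore’s Law is easier to appreciate with a little arithmetic. The sketch below is a rough projection, not a record of actual products: it assumes the commonly quoted two-year doubling period and starts from the roughly 2,300 transistors of the 4004 in 1971. The function name and parameters are hypothetical, chosen only for this illustration.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Idealized transistor count if the total doubles every `doubling_years`."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Starting point: the 4004 (1971), with roughly 2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(2_300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Fifty years at this rate means twenty-five doublings, which takes a count in the thousands into the tens of billions. Real chips have not tracked this idealized curve exactly, but the order of magnitude matches the billions of transistors found on modern microchips.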

Transformations Across Various Fields

The proliferation of microchips ignited a new epoch in electronics; they became the bedrock upon which countless electronic devices and systems are fashioned.

This development fueled an explosive growth in the electronics industry, giving rise to a new generation of firms dedicated to harnessing the potential of microchips.

Industry stalwarts like Texas Instruments and Intel became household names, evangelizing the integrated circuit and fostering a climate of fierce innovation and competition.

Chips such as the iconic 555 timer emerged as ubiquitous building blocks that showcased the unmatched versatility and reliability of microchips in consumer electronics.

As we look back on this storied industry, one finds an abundance of personal and oral histories that flesh out both the technical wizardry and the strategic acumen that drove the meteoric rise of microelectronics—an industry whose history is as rich and intricate as the devices it has helped produce.

To dissect the impact of the microchip revolution is not merely to recount advancements in technology or to chronicle the careers of individual engineers.

It is to tell the story of an essential foundation of our modern era, a narrative of the electronic signals that would come to underpin every facet of modern life.

Influence on Modern Technology

The Microchip Revolution has precipitated an extraordinary impact on modern technology, effectively shaping every facet of contemporary life.

At the heart lies the pioneering 8086 processor, which set the stage for the x86 architecture that still dominates today’s personal computer market.

The advent of microprocessors marked a pivotal shift, heralding an era of dramatically smaller, robust, and adaptable computing devices, instrumental to the personal computing revolution.

Acknowledging the footprint of historical milestones like the 4004 microprocessor and its successors, we witness their legacy in the plethora of digital devices integral to our daily routine.

Today’s microchips are technical marvels, accommodating billions of transistors, a testament to the astounding progress in semiconductor fabrication since the initial integrated circuits.

A relentless march towards miniaturization, guided by Moore’s Law, continues to bestow on us devices that are increasingly compact, yet exponentially more powerful and efficient.

This trend underpins the seismic shift towards an era of convenience and unimagined computational prowess, with the microchip at its core forever revolutionizing the very fabric of modern technology.